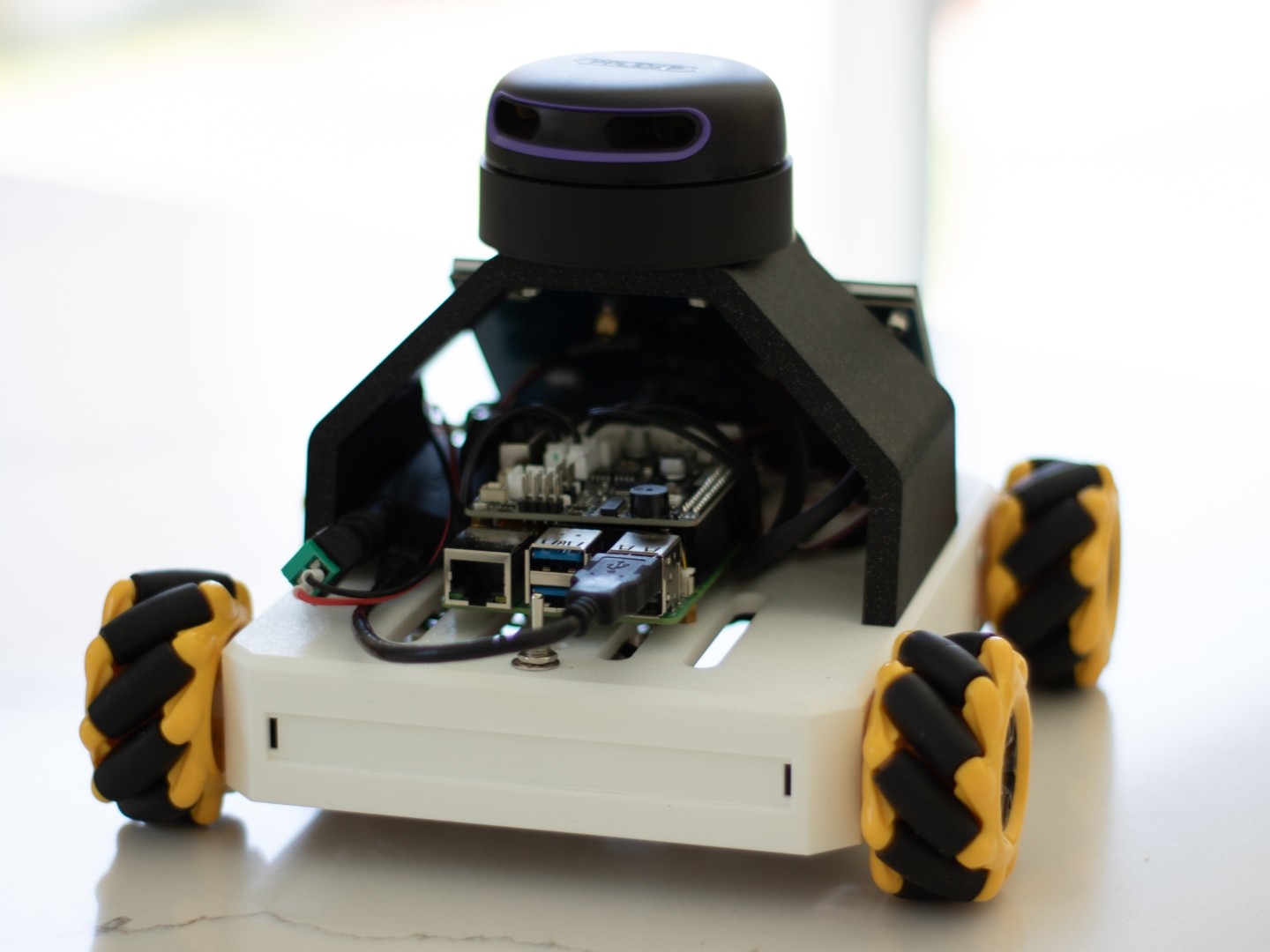

Wayfinder Autonomous Mapping Robot

An omni-directional indoor robot capable of real-time SLAM mapping and autonomous navigation.

Tools/Technologies: Raspberry Pi 5, Python, C, BreezySLAM, LiDAR (RPLidar A2M12), IMU (9-DOF), Fusion 360 CAD, 3D printing, PyQt6, Custom Power System

Date: Spring 2025

Overview

Wayfinder is an autonomous, holonomic robot designed to explore and map indoor environments using SLAM, 360° LiDAR, and real-time obstacle avoidance. The system integrates mecanum-wheel mobility, onboard computation, and custom GUI visualization to generate live maps without human intervention. The project addresses common challenges in indoor navigation—limited sensing, inconsistent lighting, and lack of GPS—by combining LiDAR-based perception with robust control logic and a fully integrated power and sensing platform.

My Role

- Designed and modeled all mechanical components and mounts in Fusion 360, including the chassis redesign and sensor integration.

- Developed the SLAM mapping integration using BreezySLAM and custom Python logic.

- Integrated the LiDAR and IMU, using a data pipeline written in C to keep LiDAR operation stable.

- Created the PyQt6 GUI, supporting live map rendering, robot status, and operator controls.

- Designed and implemented the complete power system, including battery layout and wiring.

- Built and debugged the robot’s autonomous navigation logic, including wall-following, state-based behaviors, and recovery routines.

- Led troubleshooting efforts around power instability, IMU failure, and LiDAR read errors.

- Worked collaboratively with a teammate on testing, integration, and documentation.
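
The state-based navigation mentioned above (wall-following, obstacle response, recovery) can be illustrated with a small state machine. This is a minimal sketch, not the project's actual code: the state names, the distance thresholds, the gain, and the `next_state` and `command` helpers are all hypothetical, and it assumes the wall is followed on the robot's right with +y pointing left.

```python
from enum import Enum, auto


class State(Enum):
    FOLLOW_WALL = auto()
    TURN_AWAY = auto()
    RECOVER = auto()


# Hypothetical thresholds; real values depend on the chassis and LiDAR.
FRONT_STOP = 0.35   # metres: closer than this in front counts as blocked
WALL_TARGET = 0.40  # metres: desired distance to the right-hand wall
STUCK_LIMIT = 20    # consecutive blocked ticks before recovery kicks in


def next_state(front, stuck_ticks):
    """Choose a behaviour from the front range and a blocked-tick counter."""
    if stuck_ticks >= STUCK_LIMIT:
        return State.RECOVER
    if front < FRONT_STOP:
        return State.TURN_AWAY
    return State.FOLLOW_WALL


def command(state, right):
    """Return (vx, vy, wz): forward m/s, leftward m/s, counter-clockwise rad/s."""
    if state is State.FOLLOW_WALL:
        # Proportional strafe: too far from the right wall means move right (-y).
        vy = -0.8 * (right - WALL_TARGET)
        vy = max(-0.15, min(0.15, vy))
        return (0.20, vy, 0.0)
    if state is State.TURN_AWAY:
        # Rotate in place, away from the right-hand wall.
        return (0.0, 0.0, 0.6)
    # RECOVER: back straight out before trying again.
    return (-0.15, 0.0, 0.0)
```

Because the base is holonomic, the wall-distance correction can be a sideways strafe rather than a steering change, which keeps the LiDAR heading steady while following.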

Technical Challenges

- Unstable LiDAR readouts: Caused by USB brownouts and reflective surfaces; solved by reworking power delivery, increasing USB current limits, and adjusting environmental factors.

- IMU drift and hardware failure: Required sourcing a new IMU and rewriting the fusion/integration layer to stabilize heading and improve dead-reckoning accuracy.

- Battery current limitations: The initial dual-18650 design could not supply the required current under load. A redesigned chassis doubled capacity and resolved instability.

- Navigation logic instability: Early Dijkstra-based navigation was unreliable due to inconsistent sensor inputs. The entire movement subsystem and wall-following logic were rewritten from scratch.
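
One common way to stabilize heading against gyro drift is a complementary filter, sketched below. This is an illustration under stated assumptions rather than the fusion layer actually used on Wayfinder: it assumes the 9-DOF IMU provides a gyro yaw rate and a magnetometer-derived heading, and the function names and the `alpha` weight are hypothetical.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def fuse_heading(heading, gyro_z, mag_heading, dt, alpha=0.98):
    """Run one complementary-filter step and return the new heading.

    heading:     previous fused heading (rad)
    gyro_z:      yaw rate from the gyro (rad/s)
    mag_heading: absolute heading from the magnetometer (rad)
    alpha:       trust in the gyro prediction (assumed value)
    """
    # Integrate the gyro for a smooth short-term prediction.
    predicted = heading + gyro_z * dt
    # Pull the prediction toward the absolute reference along the shortest arc.
    error = wrap_angle(mag_heading - predicted)
    return wrap_angle(predicted + (1.0 - alpha) * error)
```

The gyro term tracks fast turns, while the small magnetometer correction keeps a constant gyro bias from accumulating without bound.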

Key Design Decisions

- Holonomic mecanum-drive base for precise lateral and diagonal movement in tight indoor environments.

- Shift from ROS2 to native Python to eliminate hardware–OS compatibility issues and allow faster development.

- Chassis redesign with expanded battery capacity to increase runtime and stabilize LiDAR and IMU operation.

- PyQt6 real-time GUI for intuitive operation, debugging, and live mapping feedback.

- Adoption of BreezySLAM for efficient real-time mapping without ROS dependencies.
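
The lateral and diagonal movement of a mecanum base comes from driving each wheel at a different speed. The sketch below shows the standard inverse kinematics for a four-wheel layout with rollers at 45 degrees; the wheel radius and chassis dimensions are placeholder values, not Wayfinder's measurements, and the function name is hypothetical.

```python
def mecanum_wheel_speeds(vx, vy, wz, wheel_radius=0.03,
                         half_length=0.08, half_width=0.10):
    """Map a body velocity to wheel angular speeds in rad/s.

    vx: forward speed (m/s), vy: leftward speed (m/s),
    wz: counter-clockwise yaw rate (rad/s).
    Geometry defaults are assumed values in metres.
    Returns (front_left, front_right, rear_left, rear_right).
    """
    k = half_length + half_width
    front_left = (vx - vy - k * wz) / wheel_radius
    front_right = (vx + vy + k * wz) / wheel_radius
    rear_left = (vx + vy - k * wz) / wheel_radius
    rear_right = (vx - vy + k * wz) / wheel_radius
    return (front_left, front_right, rear_left, rear_right)
```

For a pure leftward strafe such as `mecanum_wheel_speeds(0.0, 0.2, 0.0)`, the two diagonal wheel pairs spin in opposite directions, which is what lets the robot slide sideways without turning.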

Results / Outcomes

- Achieved live SLAM mapping displayed directly on the touchscreen GUI.

- Demonstrated autonomous indoor navigation, obstacle avoidance, and exploration behaviors.

- Validated the new power system and stabilized sensor performance under real-world conditions.

- Identified improvement areas (LiDAR reflectivity, IMU drift, mapping precision) to guide future iterations.

- Gained extensive hands-on experience integrating perception, control, power, and software into a unified robotic system.

Visuals / Media
